| Place: | Conference Management Room Lobby, B1F |

| Hours: | Wednesday, December 3, 13:00-17:00<br>Thursday, December 4, 12:30-16:30<br>Friday, December 5, 10:00-14:00 |

| December 3 13:00-17:00, Conference Management Room Lobby, B1F |

| IDEMO-1 | FLOAT Platform System for Aero Signage and Spatial Visual Effects<br>Karin Ikeda, Kunio Sakamoto<br>Konan University (Japan)<br>Related IDW ’25 paper: PRJp1-1 |

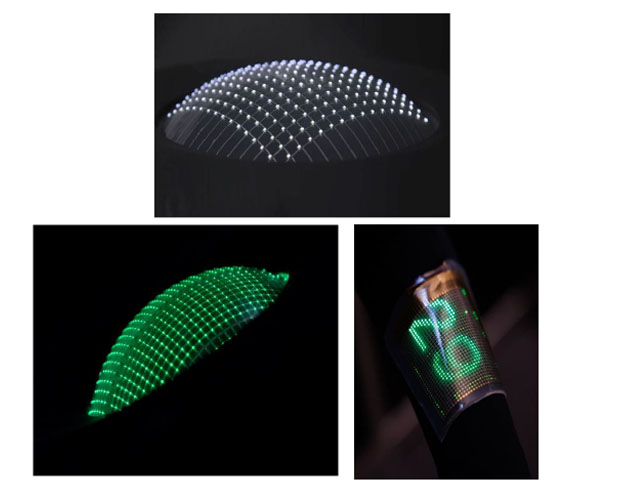

| The authors have proposed “flying 3D display units” as a new approach and have studied floating 3D images placed in rooms and above corridors or streets. We present the ‘FLOAT’ platform system, which uses hemispheres and floating volumetric objects arranged in the air as projection-mapping screens. We will demonstrate four display units at the I-Demo session, including a flying ball screen for projective display or advertising and a hemispherical FLOAT platform that can carry various optical elements or a 3D balloon object. We will also show a miniature model for stage effects and eye-catchers for marketing built on our proposed FLOAT platforms. | ||

| IDEMO-2 | Additional Eye-Catching Units for Aerial Signage Display<br>Anna Kamo, Kunio Sakamoto<br>Konan University (Japan)<br>Related IDW ’25 paper: INPp1/VHFp3-1 |

| The authors have studied floating 3D images displayed above corridors and streets, which led us to a question: can people notice something they have not yet recognized? We present methods for catching people’s attention using air-blowing, smell-delivery, and air heating and cooling units in our ‘Mirage:’ aerial display system. We will demonstrate three display units at the I-Demo session. One is the ‘Mirage:’ display system, which forms the main part of the aerial display; the others are add-on units for it. We will demonstrate eye-catching methods based on air blowing and, from a marketing viewpoint, a smell-delivery system that uses the same air-blowing system to stimulate the appetites of passers-by. | ||

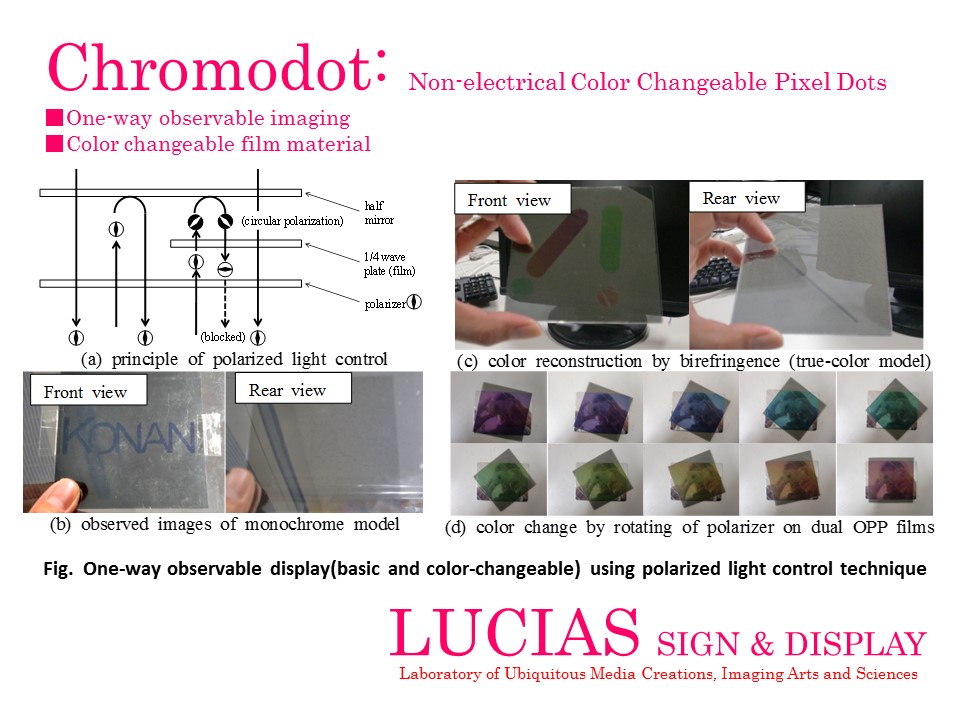

| IDEMO-3 | Variable POP Display Which Enables to Change Apparent Shape on Frame Design<br>Kunio Sakamoto, Anna Kamo, Karin Ikeda<br>Konan University (Japan)<br>Related IDW ’25 paper: EPp1-2 |

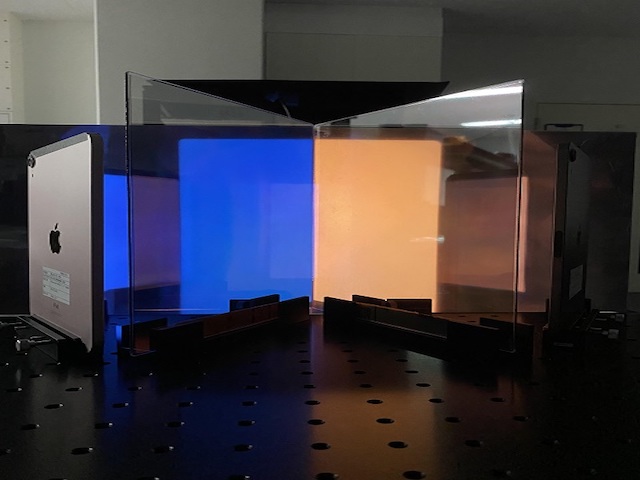

| POP cards are useful for catching customers’ eyes, but their non-recyclable design means they cannot be reused. The authors have studied a variable imaging board system that can reproduce various shapes. We present a variable transparent POP display that uses a polarization control technique and coloring technology. At the I-Demo session, we will demonstrate two types of POP display units (opaque and transparent boards), together with image switching, color generation, and polarization control techniques. If visual effects from an aerial signage unit and smell display technology are embedded, the proposed POP display could become even more useful in the future. | ||

| IDEMO-4 | (Invited) UserDetect - Driver Passenger Touch Discrimination<br>Hirohisa Onishi¹, John Shanley²<br>¹Microchip Technology Japan K.K. (Japan), ²Microchip Technology, Inc. (USA)<br>Related IDW ’25 paper: INP3-3 |

| In an automotive environment, a touchscreen allows both the driver and the passenger to select the same items on the panel. User detection provides the option of blocking touch input from either occupant. This provides an extra layer of safety and security for functions such as navigation input while the vehicle is in motion, or for other inputs such as switching the hazard warning lights on or off. As the automotive industry moves toward hands-free and fully automated driving, it becomes even more essential to have this kind of user detection to ensure that critical systems are operated by the correct user. | ||
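
As a rough illustration of the policy described above, the sketch below gates each touch by who made it. It assumes the touch controller already reports whether a touch came from the driver or the passenger; the `Occupant` enum, the function name, and the contents of the restricted-function table are hypothetical and are not part of Microchip's UserDetect interface.

```python
from enum import Enum


class Occupant(Enum):
    DRIVER = "driver"
    PASSENGER = "passenger"


# Hypothetical policy: functions the driver may not operate while the vehicle moves.
RESTRICTED_FOR_DRIVER_WHILE_MOVING = {"navigation_input"}


def accept_touch(occupant: Occupant, function: str, vehicle_moving: bool) -> bool:
    """Return True if a touch from `occupant` may operate `function` right now."""
    if (
        occupant is Occupant.DRIVER
        and vehicle_moving
        and function in RESTRICTED_FOR_DRIVER_WHILE_MOVING
    ):
        return False
    return True


# The passenger can still enter a destination while the car is moving; the driver cannot.
print(accept_touch(Occupant.PASSENGER, "navigation_input", vehicle_moving=True))  # True
print(accept_touch(Occupant.DRIVER, "navigation_input", vehicle_moving=True))     # False
```

Keeping the policy in a plain table makes it easy to restrict other functions to a particular occupant without changing the decision logic.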

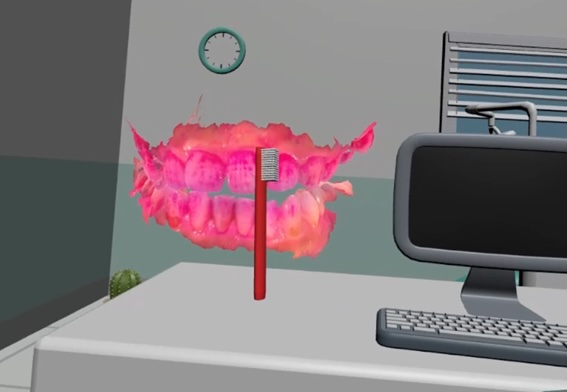

| IDEMO-5 | Experiencing Symptoms of Tooth Decay Using Cross-Modal Perception<br>Naoya Tanigaki, Takeshi Watanabe, Keiichiro Watanabe, Kenji Terada<br>Tokushima University (Japan)<br>Related IDW ’25 paper: 3D8-2 |

| Tooth brushing is important for preventing dental caries, but it is difficult to stay motivated. A tool that lets people experience just how painful tooth decay is may help motivate them to brush their teeth. Cross-modal perception refers to sensations created by the interaction of different human senses. We attempted to induce the symptoms of tooth decay through visual and auditory information presented by devices such as an autostereoscopic display, headphones, and a head-mounted display. We will demonstrate the experience of tooth pain through cross-modal perception (Figure 1). | ||

| IDEMO-6 | Zero-Shot Noise2Noise Denoising for Underwater Image Enhancement<br>Yi-Tsung Pan<br>National Taiwan University of Science and Technology (Taiwan)<br>Related IDW ’25 paper: IST4-2 |

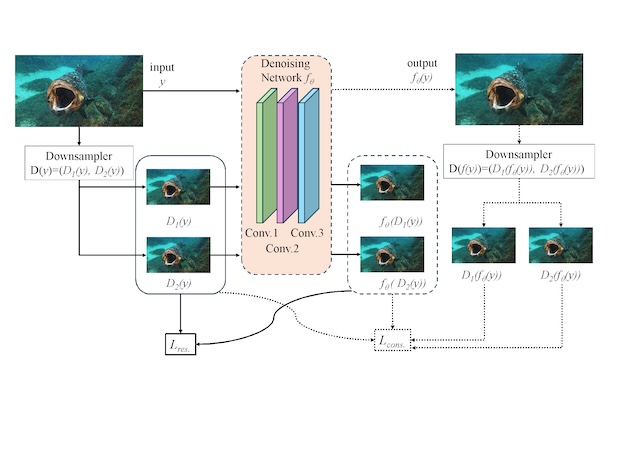

| Underwater images suffer from complex noise patterns caused by light attenuation, scattering, and sensor limitations, and it is difficult to capture clean and noisy images of the same scene at the same time. The goal of this research is to denoise underwater images without high computing power, a known noise model, or a sufficient number of clean, high-quality images. We demonstrate a simple 3-layer network, modified from the work of Mansour, Y. et al., that quickly produces improved images without any pre-training data or knowledge of the noise distribution, and removes noise at a lower computational cost than conventional methods. | ||
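
Zero-Shot Noise2Noise, the method by Mansour et al. that this work builds on, trains a small network on the single noisy image itself. As we understand the original paper, it splits the image into two half-resolution sub-images whose noise is roughly independent and uses each as the training target for the other. Below is a minimal pure-Python sketch of that splitting step (the "pair downsampler"), assuming a single-channel image stored as a list of rows with even height and width; it is not the presenter's modified network.

```python
def pair_downsample(img):
    """Split a 2D image into two half-resolution sub-images.

    Each 2x2 block contributes the mean of its main-diagonal pixels to `d1`
    and the mean of its anti-diagonal pixels to `d2`, so the two outputs are
    built from disjoint pixels (and thus disjoint noise) of the same scene.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    d1, d2 = [], []
    for y in range(0, h, 2):
        row1, row2 = [], []
        for x in range(0, w, 2):
            a, b = img[y][x], img[y][x + 1]          # top-left, top-right
            c, d = img[y + 1][x], img[y + 1][x + 1]  # bottom-left, bottom-right
            row1.append((a + d) / 2)  # main diagonal
            row2.append((b + c) / 2)  # anti-diagonal
        d1.append(row1)
        d2.append(row2)
    return d1, d2


img = [[1, 2],
       [3, 8]]
d1, d2 = pair_downsample(img)
print(d1, d2)  # [[4.5]] [[2.5]]
```

The network is then fitted so that its output on one sub-image approximates the other; the training loss (which in the original paper also includes a consistency term) is not shown here.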

| IDEMO-7 | A Flat Panel Detector with Computational Pixel Design Enabling High SNR and HDR<br>Haorong Xie, Weikang Yan, Kai Wang<br>Sun Yat-Sen University (China)<br>Related IDW ’25 paper: IST2-1 |

| Previously, a 75 µm² 2T1D 256×256 array was trialed, demonstrating logarithmic-exponential operation, high gain, and wide dynamic range for low-dose static X-ray imaging, although its small scale did not allow dynamic performance to be shown. Now, a 300 µm² 2T1D 0816 512×256 array has confirmed higher gain, no tailing, and a high refresh rate, making it suitable for indirect-conversion, low-dose, online, rapid dynamic X-ray detection. Simulations show that 2T pixels (with the photodiode removed for use with X-ray direct-conversion materials) work for direct conversion, with a single-pixel gain of 3×10⁵ and a maximum frequency of 0.1 MHz, showing great potential for direct-conversion, low-dose, online, rapid dynamic X-ray detection. | ||

| IDEMO-8 | 3D-OLED Display<br>Peter Levermore, Rajan Giller, Ion Forbes, Daping Chu, Donal Bradley<br>Excyton Limited (UK)<br>Related IDW ’25 paper: OLED7-3L |

| Excyton will showcase a prototype 8 × 8 PM-OLED display with vertically stacked red, green and blue sub-pixels. Each sub-pixel is independently controllable. The display renders the sRGB colour gamut with 8 bits per colour and has a peak brightness of 180 cd/m² at the D65 white point, with less than 5% brightness variation across all pixels. To the best of our knowledge, this is the first demonstration of an OLED display with vertically stacked sub-pixels. This pixel design has the potential to increase display lifetime, brightness, resolution and efficiency, as well as to enable full-colour displays at low resolution. | ||

| IDEMO-9 | Improvement Techniques for Ghosting in Virtual Reality (Pancake Optics)<br>Daisuke Hayashi, Shota Suruga<br>Nitto Denko Corporation (Japan) |

| We will demonstrate our techniques for reducing ghosting in virtual reality (VR) pancake optics. Ghosting is one of the major issues in VR. The improvement is achieved with polymer-type half-wave plates (HWPs) and quarter-wave plates (QWPs) made with Z-axis stretching technology, a technique for stretching film in the thickness direction. The NZ coefficient of these films, NZ = (nx - nz)/(nx - ny), where nx and ny are the in-plane refractive indices and nz is the refractive index in the thickness direction, has been adjusted to 0.5. Using the lens assembly, you can experience a demonstration comparing the ghosting of conventional technology with that of our technology. | ||
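
Setting NZ = 0.5 places the thickness-direction index exactly halfway between the two in-plane indices: from nx - nz = 0.5(nx - ny) it follows that nz = (nx + ny)/2, a condition commonly associated with retardation that changes little with the angle of incidence. The small sketch below computes NZ and the nz needed for a target value; the refractive indices in it are illustrative placeholders, not measured values for these films.

```python
def nz_coefficient(nx, ny, nz):
    """NZ = (nx - nz) / (nx - ny); nx, ny are in-plane, nz is the thickness direction."""
    if nx == ny:
        raise ValueError("NZ is undefined when nx == ny (no in-plane birefringence)")
    return (nx - nz) / (nx - ny)


def nz_for_target(nx, ny, target):
    """Thickness-direction index that yields the requested NZ value."""
    return nx - target * (nx - ny)


# Illustrative indices only (hypothetical, not data for the demonstrated films).
nx, ny = 1.5010, 1.5000
nz = nz_for_target(nx, ny, 0.5)
print(round(nz, 4))                          # 1.5005, i.e. (nx + ny) / 2
print(round(nz_coefficient(nx, ny, nz), 6))  # 0.5
```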

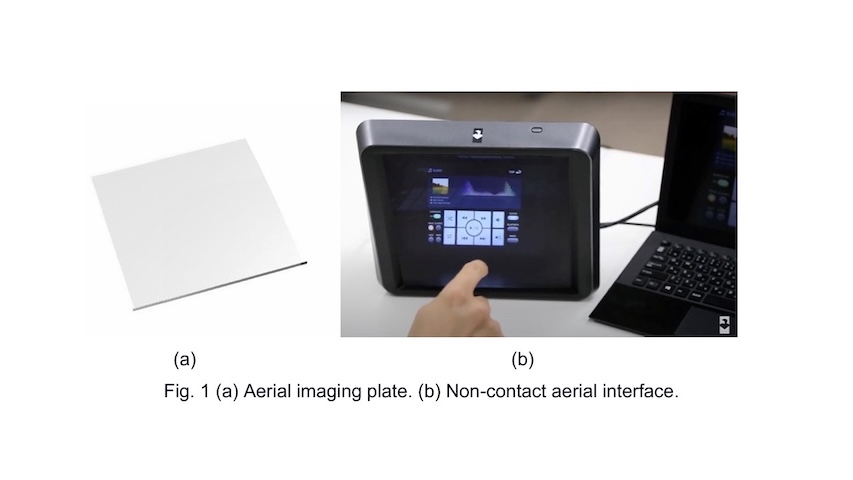

| IDEMO-10 | Image Quality Improvement Method for Table-top Reflective Aerial Images by Tilting the Display Surface<br>Ayami Hoshi, Motohiro Makiguchi, Naoto Abe<br>NTT Inc. (Japan) |

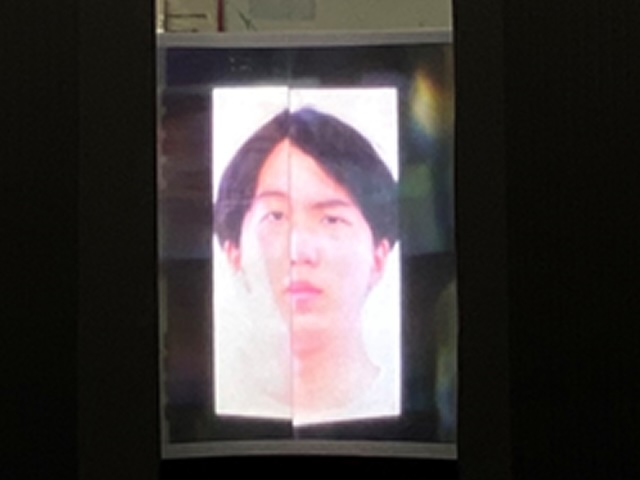

| Some aerial imaging optical systems project a virtual character onto a table so that users can view it from above. For such a system, we propose a method that uses the display and optical elements more efficiently, increasing the size of the aerial image while maintaining the vertical viewing range and improving its luminance and image quality compared with conventional methods. In a demonstration, the virtual character appeared in such high quality that even its facial expressions could be seen. | ||

| IDEMO-11 | Full-Color Full-Parallax Computer-Generated Hologram Reconstructing Real Object of Any Size<br>Kengo Tada, Hirohito Nishi, Kyoji Matsushima<br>Kansai University (Japan) |

| We will demonstrate full-parallax high-definition computer-generated holograms (FPHD-CGHs) that reconstruct full-color 3D images of real objects (Fig. 1(a)). The FPHD-CGH, more than 10 cm × 10 cm in size, consists of more than 34 billion pixels and RGB color filters. It is calculated using a technique based on free-viewpoint images generated by machine learning technologies such as NeRF, which allows it to reconstruct physical objects of any size. When the FPHD-CGH is illuminated with an RGB laser (Fig. 1(b)), viewers can see a 3D image of a large real object with a very strong sensation of depth. | ||

| IDEMO-12 | Proposal of Hyper-Realistic Moderate-Dynamic-Range (hMDR) Reproduction Technique from Twilight Ultra-HDR Environments Suitable for Reflective Displays Like E-paper<br>Sakuichi Ohtsuka<br>International College of Technology, Kanazawa (Japan)<br>Related IDW ’25 paper: DES4-4L |

| Reflective displays (RDs) are highly eco-friendly because they consume less power than emissive displays. Their weak point, however, has been their limited dynamic range. We demonstrate our newly developed hMDR image generation technique for RDs, which compresses HDR images with a dynamic range of approx. 10,000:1 to only approx. 30:1 using global tone mapping (GTM) alone. This range is about one-third of that of conventional SDR or HDR-tone images produced with local tone mapping (LTM), whose dynamic range is approx. 100:1. You will be able to observe images printed on A3 paper. | ||
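
A global tone-mapping operator applies one curve to every pixel, regardless of its neighborhood. As a rough illustration of the numbers above, and not the hMDR operator itself (which is not described here), a power-law curve on normalized luminance with exponent γ = log(30)/log(10,000) ≈ 0.37 maps a 10,000:1 input range onto exactly 30:1. The choice of a power law is our assumption.

```python
import math


def global_power_tone_map(luminances, out_ratio=30.0):
    """Compress a scene's luminance range to `out_ratio`:1 with one global curve.

    Every pixel goes through the same power law L_out = (L / L_max) ** gamma,
    with gamma chosen so that the input contrast maps exactly to `out_ratio`.
    """
    l_min, l_max = min(luminances), max(luminances)
    if l_min <= 0 or l_max / l_min <= 1:
        raise ValueError("need positive luminances spanning a range greater than 1:1")
    gamma = math.log(out_ratio) / math.log(l_max / l_min)
    return [(l / l_max) ** gamma for l in luminances], gamma


# Hypothetical scene: deep shadow (1) to bright sky (10,000), relative units.
mapped, gamma = global_power_tone_map([1.0, 10.0, 100.0, 1000.0, 10000.0])
print(round(gamma, 3))                      # 0.369
print(round(max(mapped) / min(mapped), 1))  # 30.0
```

Because one curve is shared by all pixels, the ordering of luminances is preserved, which is what distinguishes GTM from local operators that adapt the curve per region.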

| IDEMO-13 | Let's Experience a Novel Maxwellian Optics<br>Shuri Futamura, Tatsuzi Tokiwa<br>Hiroshima City University (Japan) |

| This demonstration presents a novel Maxwellian optical system that combines a Transmissive Mirror Device (TMD) with our proposed Spherical Multi-Pinhole (SMP). The system eliminates the image loss that occurs during eye rotation in conventional setups, allowing stable and clear image presentation independent of gaze direction. Moreover, owing to the characteristics of Maxwellian viewing, participants with refractive errors such as myopia or hyperopia can perceive sharp retinal images without the need for corrective lenses. The proposed system achieves a wide field of view and shows strong potential for application in next-generation VR/AR display technologies. | ||

| December 4 12:30-16:30, Conference Management Room Lobby, B1F |

| IDEMO-14 | Wide Viewing Zone Angle Hologram Using 1-µm-Pixel-Pitch Ferroelectric Liquid Crystal Cell<br>Yuta Yamaguchi¹, Yoshitomo Isomae², Mikio Oka²<br>¹Japan Broadcasting Corporation (NHK) (Japan), ²Sony Semiconductor Solutions Corporation (Japan)<br>Related IDW ’25 paper: 3D1-1 |

| We demonstrate the optical reconstruction of a static computer-generated 3D holographic image of 9,600 × 9,600 pixels with a pixel pitch of 1 µm × 1 µm, using a fabricated ferroelectric liquid crystal (FLC) cell. Viewers can observe the reconstructed 3D image in front of the FLC cell, and motion parallax is visible as the viewing position moves through the wide viewing zone. The cell is driven at a low voltage (±0.55 V, zero-to-peak) and a high frequency (1,080 Hz) and is illuminated by a green LED. | ||
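
Why a 1 µm pitch gives a wide viewing zone follows from the grating equation: a pixelated hologram with pitch p diffracts light of wavelength λ into a zone of full angle θ = 2 arcsin(λ / 2p). The quick check below assumes a green LED wavelength of about 520 nm (the actual LED spectrum is not given here) and compares against a hypothetical 8 µm-pitch device.

```python
import math


def viewing_zone_deg(wavelength_um, pitch_um):
    """Full viewing-zone angle of a pixelated hologram: 2 * asin(lambda / (2 p))."""
    s = wavelength_um / (2 * pitch_um)
    if s > 1:
        raise ValueError("pitch too small for this wavelength: no real diffraction angle")
    return math.degrees(2 * math.asin(s))


pitch_um = 1.0
size_mm = 9600 * pitch_um / 1000  # 9,600 pixels at 1 um pitch
print(size_mm)                                     # 9.6
print(round(viewing_zone_deg(0.52, pitch_um), 1))  # 30.1 (assumed 520 nm light)
print(round(viewing_zone_deg(0.52, 8.0), 1))       # 3.7 (hypothetical 8 um pitch)
```

The angle grows roughly in inverse proportion to the pitch, which is why shrinking the pixel pitch is the main route to a wide viewing zone.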

| IDEMO-15 | Tooth Brush Training Using Virtual Reality<br>Takeshi Watanabe, Kotone Yamashita, Yoshitaka Suzuki, Kenji Yamamoto, Yuki Ishihara<br>Tokushima University (Japan)<br>Related IDW ’25 paper: 3D8-1 |

| Brushing teeth every day is tough work. To help people stay motivated to brush, we developed a tailor-made tooth-brushing training tool. In the VR space, your own teeth appear right before your eyes, allowing you to learn their shape and where plaque tends to accumulate. You can clearly see the shape of your back teeth, which are difficult to view in a mirror. You can also brush your own teeth while watching them in the VR space (Figure 1). Using this tool may foster a sense of attachment to your own teeth, making you want to brush them every day. | ||

| IDEMO-16 | DEMO of "Compact VR Optical System Using a Lens Array"<br>Kento Matsuo, Haozhe Cui, Chuanyu Ma, Reiji Hattori<br>Kyushu University (Japan)<br>Related IDW ’25 paper: 3D10-4 |

| We will demonstrate prototype VR goggles using a new VR optical system, to be presented in our talk “Compact VR Optical System Using a Lens Array” (Session: 3D10). Attendees can try the prototype firsthand. The system uses a lens array to achieve a shorter focal length, a wider field of view, and higher light efficiency than conventional pancake lenses. Through this demo, we will show that our optical system enables significantly thinner VR goggles. The prototype on display has an optical thickness of 14.6 mm, and it is theoretically possible to make it even thinner. | ||

| IDEMO-17 | High-Transmittance Surface-Relief Hologram<br>Ryo Higashida<br>Japan Broadcasting Corporation (NHK) (Japan)<br>Related IDW ’25 paper: 3D2-2 |

| We will demonstrate the reconstruction of surface-relief holograms fabricated by laser lithography and reactive ion etching on glass substrates. By encoding complex amplitude information, the transmittance of the holograms can be precisely controlled, allowing the physical object behind the hologram to remain visible. This enables the simultaneous viewing of photorealistic holographic three-dimensional images and the physical object, opening new possibilities for three-dimensional visual expression. | ||

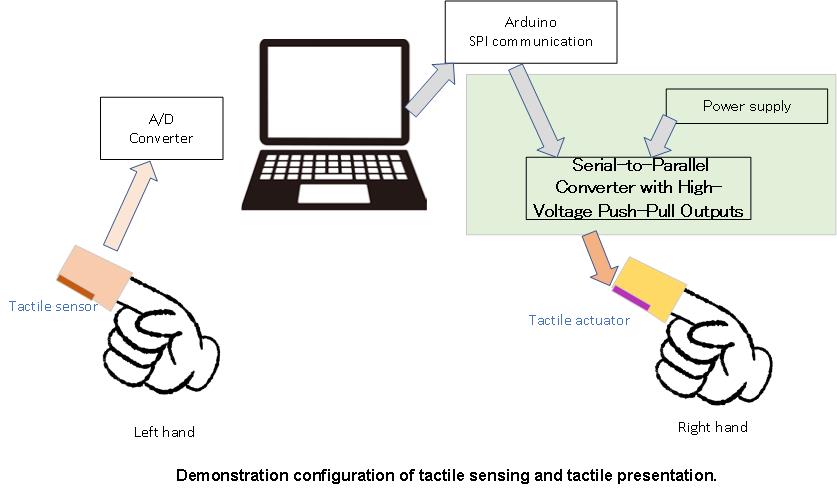

| IDEMO-18 | (Invited) Tactile Sensing for Remote Perception<br>Alexander Schmitz<br>XELA Robotics (Japan)<br>Related IDW ’25 paper: INP1/VHF1-3 |

| XELA Robotics makes tactile sensors (uSkin). The sensors are small and provide distributed 3-axis force measurements (normal and shear forces). The force resolution is 0.1 gf, and at the same time the sensors are soft and robust. We investigate possibilities for remote perception and haptic feedback with uSkin. | ||

| IDEMO-19 | Optical Design for Super-Multiview Integral 3D Display<br>Hayato Watanabe<br>Japan Broadcasting Corporation (NHK) (Japan)<br>Related IDW ’25 paper: 3D10-1 |

| We demonstrate a super-multiview (SMV) integral three-dimensional (3D) display aimed at reducing the vergence-accommodation conflict. The 3D display consists of only a display device and a lens array. By optimizing the arrangement of the lens array and elemental image array, the density of the light rays forming a 3D image is increased in exchange for narrowing the viewing zone to only the periphery of the pupils. We also demonstrate a conventional integral 3D display that provides a wide continuous viewing zone covering both pupils. You can experience the difference between the conventional and SMV integral 3D displays. | ||

| IDEMO-20 | Edge-Optimized Real-Time Sign Language Recognition Using YOLOv5, Signformer, and Physical Neural Networks<br>Yu-Ju Cheng, Yu-Wu Wang<br>National Changhua University of Education (Taiwan)<br>Related IDW ’25 paper: INPp1/VHFp3-6 |

| This demonstration presents a real-time American Sign Language recognition system implemented on a laptop using YOLOv5 for gesture detection. The system achieves high recognition accuracy and provides instant visual feedback. Future work will integrate Signformer for translation and explore physical neural networks (PNN) for efficient on-device computation. | ||

| IDEMO-21 | High-Resolution Aerial Display with a Wide Field of View Using Combined Lens-Enhanced Aerial Imaging by Retro-Reflection<br>Kazuaki Takiyama, Shiro Suyama, Hirotsugu Yamamoto<br>Utsunomiya University (Japan)<br>Related IDW ’25 paper: FMC7-2 |

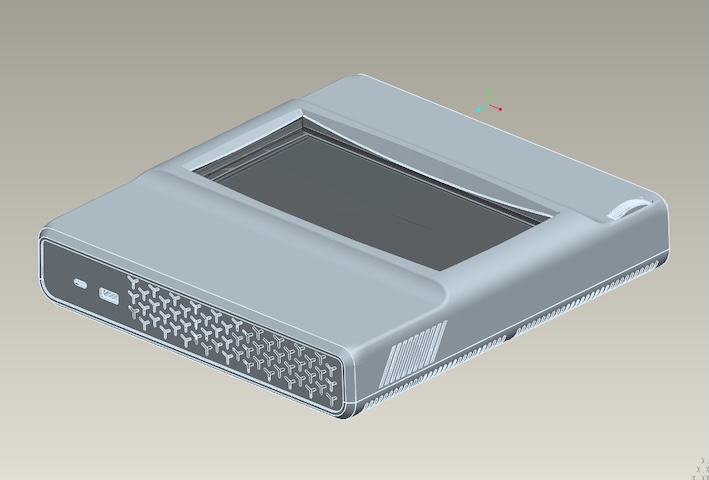

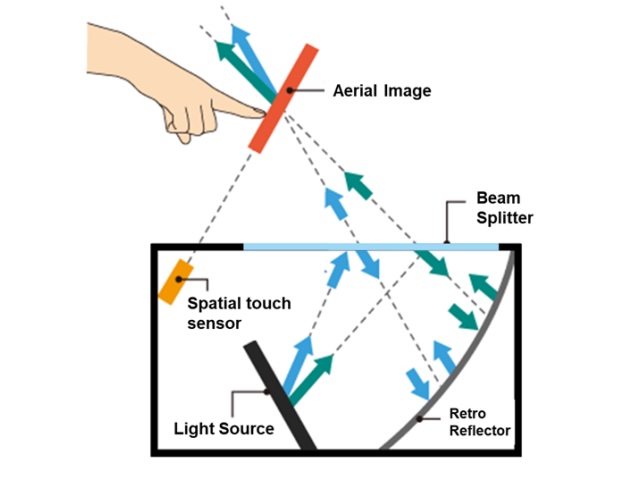

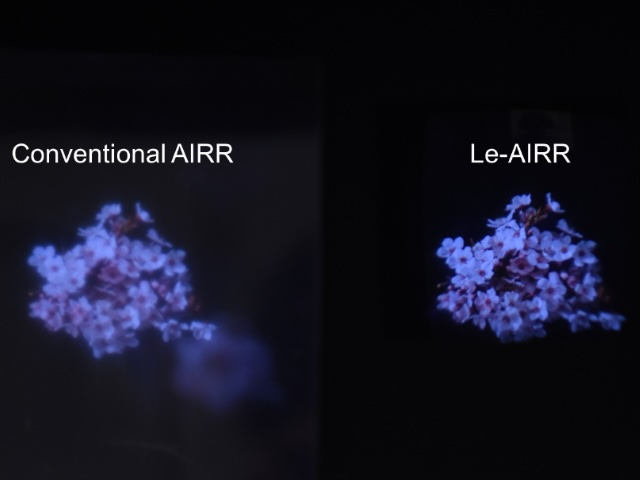

| We will demonstrate a high-resolution aerial display system with a wide field of view (FOV) by use of lens-enhanced aerial imaging by retro-reflection (LeAIRR). Aerial images of a conventional AIRR are significantly blurred. The LeAIRR with a combination lens forms a high-resolution aerial image with a 30-degree FOV. Compared to the LeAIRR with a single lens, the proposed LeAIRR with the combination lens realizes a wider FOV and a compact optical system. This demo system uses conventional AIRR and the proposed LeAIRR to form aerial images. The observer can compare the resolution of both aerial images. | ||

| IDEMO-22 | Holographic Contact Lens Display<br>Yuto Hayashi, Fuma Hirata<br>Tokyo University of Agriculture and Technology (Japan)<br>Related IDW ’25 paper: 3D1-4L |

| We will demonstrate an innovative AR system that displays arbitrary still images using holography. The demo system consists of a laser light source and a hologram placed on the surface of a contact lens. When laser light enters the hologram, the recorded image is projected. Because the image is displayed at a distance from the eye, users can focus on it even though the contact lens is positioned close to the eye. In the future, we plan to use electronic holograms to switch images and display videos. | ||

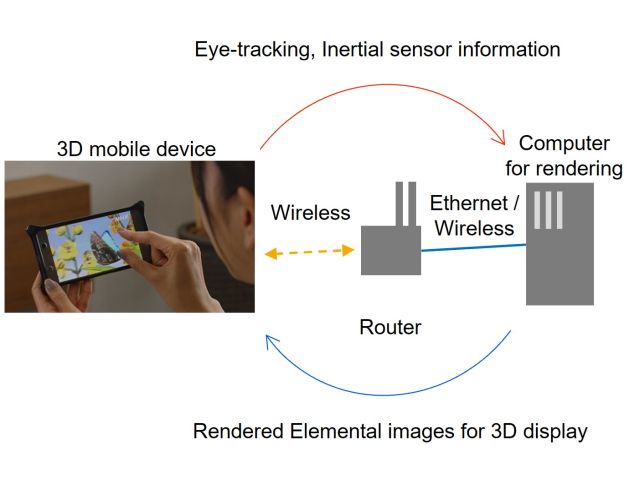

| IDEMO-23 | Content Control in Eye-Tracking Light Field Displays Using Network Technology<br>Hisayuki Sasaki, Hayato Watanabe<br>NHK Foundation (Japan)<br>Related IDW ’25 paper: 3D8-4L |

| We present a content control system for eye-tracking light field displays, designed to support dynamic and context-aware 3D visualization. Content switching is achieved by applying remote control to a download-based architecture with local rendering, which is considered a promising approach for future deployment. The demonstration includes two entertainment scenarios and one emergency response application, highlighting the system’s adaptability. While users are engaged with entertainment content, the broadcasting station can seamlessly override it with urgent alerts. This approach enhances the practicality of light field displays in public and personal environments, offering both immersive experiences and responsive communication capabilities. | ||
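
One way to picture the override behavior described above is a receiver that keeps pre-downloaded content locally and switches scenes on remote commands, with emergency commands pre-empting entertainment. The minimal sketch below is hypothetical throughout: the class, method names, content IDs, and two-level priority scheme are our assumptions and do not describe NHK's actual protocol.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical priority levels: higher numbers pre-empt lower ones.
ENTERTAINMENT = 0
EMERGENCY = 1


@dataclass
class LightFieldReceiver:
    """Display-side controller: scenes are downloaded in advance and rendered locally."""

    library: Dict[str, str] = field(default_factory=dict)  # content_id -> local scene data
    current: Optional[str] = None
    current_priority: int = ENTERTAINMENT

    def download(self, content_id: str, scene: str) -> None:
        self.library[content_id] = scene

    def on_remote_command(self, content_id: str, priority: int) -> bool:
        """Switch to `content_id` unless it is missing locally or outranked."""
        if content_id not in self.library:
            return False  # nothing downloaded yet, so nothing to render locally
        if priority < self.current_priority:
            return False  # e.g. entertainment cannot replace an active alert
        self.current, self.current_priority = content_id, priority
        return True

    def clear_alert(self) -> None:
        """Called when the alert ends; lower-priority commands may switch again."""
        self.current_priority = ENTERTAINMENT


rx = LightFieldReceiver()
rx.download("concert", "concert scene")
rx.download("alert", "evacuation guidance")
rx.on_remote_command("concert", ENTERTAINMENT)
rx.on_remote_command("alert", EMERGENCY)                # broadcaster overrides
print(rx.current)                                      # alert
print(rx.on_remote_command("concert", ENTERTAINMENT))  # False while the alert is active
```

Only a short command crosses the network at switch time; the heavy 3D content is already on the device, which matches the download-based, locally rendered design the paragraph describes.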

| IDEMO-24 | Two-Layer Aerial Image Display<br>Takehiro Kaneko, Yasuhiro Takaki<br>Tokyo University of Agriculture and Technology (Japan)<br>Related IDW ’25 paper: 3Dp1-17L |

| A two-layer aerial image display will be demonstrated. Conventional aerial display systems require considerable depth, and generating two images requires two aerial display systems. The new aerial display we developed produces a front image (the aerial image) and a rear image (the light source array) in a flat form factor by using polarization optical elements. The proposed system is suitable for combination with large LED display panels, so it could be used for outdoor advertising and ultra-large-screen displays at concert halls and stadiums. A small-scale verification system using 8 × 7 LEDs will be shown. | ||

| IDEMO-25 | Stereo Video Generation by Generative AI from Single Video<br>Taemyong Shin¹, Kyosuke Yanagida¹, Takafumi Koike¹,²<br>¹Hosei University (Japan), ²RealImage Inc. (Japan) |

| We have developed a technology that automatically generates high-quality 3D stereo images from 2D video. The automatic generation technique estimates depth from the 2D video and warps the video based on the estimated depth. It then inpaints missing parts of the generated 3D stereo images using a diffusion model. This demonstration displays the 3D stereo images generated by the proposed method on a tablet-sized autostereoscopic 3D display. The exhibition allows comparison between the original 2D video and the generated 3D stereo images. | ||
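
The pipeline above (depth estimation, depth-based warping, inpainting) can be illustrated on a single image row. Below is a minimal sketch of the warping step only, assuming depth has already been estimated and normalized so that 0 is nearest. The linear depth-to-disparity mapping is our illustrative choice, not the authors' method. The `None` entries it leaves are the disocclusion holes that the diffusion-model inpainting step is described as filling.

```python
from typing import List, Optional


def warp_scanline(pixels: List[int], depth: List[float], max_disparity: int) -> List[Optional[int]]:
    """Forward-warp one row of a left-eye frame to a right-eye viewpoint.

    `depth` is normalized to [0, 1] with 0 = nearest. Nearer pixels get larger
    disparity and shift further left; when two source pixels land on the same
    target, the nearer one wins (a simple z-buffer). Targets that receive no
    pixel stay None: these are the holes left for inpainting.
    """
    width = len(pixels)
    out: List[Optional[int]] = [None] * width
    zbuf = [float("inf")] * width
    for x, (value, z) in enumerate(zip(pixels, depth)):
        disparity = round(max_disparity * (1.0 - z))  # hypothetical linear mapping
        tx = x - disparity
        if 0 <= tx < width and z < zbuf[tx]:
            out[tx] = value
            zbuf[tx] = z
    return out


row = [10, 20, 30, 40, 50, 60]
depth = [1.0, 1.0, 0.0, 0.0, 1.0, 1.0]  # a near object in the middle of the row
print(warp_scanline(row, depth, max_disparity=2))  # [30, 40, None, None, 50, 60]
```

The near object covers the background to its left, and the background that should appear behind it is missing, which is exactly the kind of region a generative inpainting model has to synthesize.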

| December 5 10:00-14:00, Conference Management Room Lobby, B1F |

| IDEMO-26 | Micro LED-Driven User Interface and Experience Design Framework for Next-Generation Smartwatches<br>Yu-Qi Tang, Yan-Jie Cao, Hung-Pin Hsu, Yi-Ping Wang<br>Ming Chi University of Technology (Taiwan) |

| This study explores the potential of Micro LED technology in next-generation smartwatches. Building on its advantages of high brightness, low power consumption, wide color gamut, and long lifetime, the research proposes a design framework that integrates vibrant color schemes to enhance visual quality and user experience. | ||

| IDEMO-27 | Click Display: Novel Steering Wheel for Automotive Cockpit HMI<br>Yasuhiro Sugita<br>Sharp Corporation (Japan) |

| The 5.5-inch prototype is a novel steering wheel with on-screen buttons that uses a highly sensitive force touch sensor. Our proposed "Click Display" technology gives a display a click-like operating feel by combining three technologies with it: a highly sensitive force touch sensor, a 3D-shaped cover, and haptic feedback. Combined with a novel GUI, a single on-screen control button can perform multiple functions such as audio, map, lights, adaptive cruise control, and others. Replacing conventional buttons with our multi-functional on-screen button reduces the number of mechanical control buttons. The prototype also enables more advanced designs. | ||
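
A common way to turn a force sensor into a click-like button is threshold detection with hysteresis, firing a haptic pulse on each press. The sketch below assumes that approach; the class name, the two thresholds, and their values in newtons are hypothetical and are not taken from Sharp's Click Display.

```python
class ForceClickDetector:
    """Turn a stream of force readings into click events with hysteresis.

    A "press" fires once when force rises to press_n or above; the force must
    then fall to release_n or below before another press can fire, so sensor
    noise near a single threshold does not produce repeated clicks.
    """

    def __init__(self, press_n=2.0, release_n=1.0):
        if release_n >= press_n:
            raise ValueError("release threshold must be below press threshold")
        self.press_n, self.release_n = press_n, release_n
        self.pressed = False

    def update(self, force_n):
        """Return 'press', 'release', or None for one force sample (newtons)."""
        if not self.pressed and force_n >= self.press_n:
            self.pressed = True
            return "press"  # a haptic click pulse would be triggered here
        if self.pressed and force_n <= self.release_n:
            self.pressed = False
            return "release"
        return None


detector = ForceClickDetector()
samples = [0.5, 1.9, 2.1, 1.5, 2.2, 0.8, 2.5]
print([detector.update(f) for f in samples])
# [None, None, 'press', None, None, 'release', 'press']
```

Resting a thumb lightly on the button (force below the press threshold) produces no event, which is what lets a touch surface behave like a physical button rather than reacting to every contact.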

| IDEMO-28 | Coatable Light-Dimming Film Using Functional Nanoparticle Dispersion Ink<br>Michiaki Fukui¹, Kazuki Tajima²<br>¹Hayashi Telempu Corporation (Japan), ²AIST (Japan) |

| Windows in homes and automobiles are used to observe the outside, but they also allow significant sunlight to enter the interior, contributing to increased air conditioning loads. At our exhibition booth, we will display large samples of our coatable light-dimming film, which utilizes a functional nanoparticle dispersion ink developed to control sunlight entering through windows. Additionally, we will introduce the black-toned light-dimming film currently under development. | ||

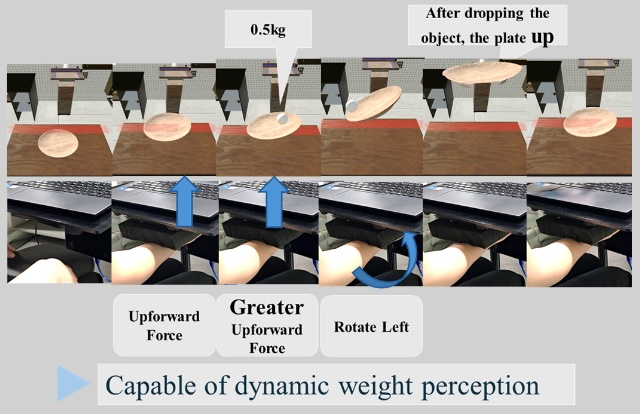

| IDEMO-29 | A Weight Change Perception with Hand Semi-Constrained<br>Momoka Sugahara, Wataru Wakita<br>Hiroshima City University (Japan) |

| We will demonstrate a system that allows users to perceive weight changes when lifting and dropping objects in VR space. The demo system takes force as its input and outputs the visually presented position of the hand. Users apply force with their palms to load cells to lift and drop objects, and the system uses the sensor values to control the movement of the hand object in the VR space. The resulting images are shown on a monitor, allowing users to perceive the weight visually. In this way, users can experience dynamic changes in weight in real time. | ||
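
The paragraph does not specify how load-cell force becomes hand motion. As a purely hypothetical illustration of a force-input, position-output mapping, the sketch below moves the virtual hand in proportion to the net force (palm force minus the virtual object's weight), so a heavier virtual object visibly rises more slowly under the same push. The function name, gain, and time step are our assumptions.

```python
G = 9.81  # gravitational acceleration, m/s^2


def hand_heights(forces_n, object_mass_kg, dt=0.01, gain=0.05):
    """Hypothetical force-to-position mapping for the virtual hand.

    At each step, the net force (palm force on the load cells minus the
    virtual object's weight) moves the hand up or down in proportion to its
    size. The hand cannot go below the table surface (height 0).
    """
    height = 0.0
    heights = []
    for f in forces_n:
        net = f - object_mass_kg * G
        height = max(0.0, height + gain * net * dt)
        heights.append(height)
    return heights


push = [15.0] * 100  # the same 15 N palm force for 1 s of samples
light = hand_heights(push, object_mass_kg=1.0)
heavy = hand_heights(push, object_mass_kg=1.5)
print(light[-1] > heavy[-1])  # True: the same push lifts the heavier object less
```

Changing `object_mass_kg` while the user keeps pushing is one way such a system could present a weight change purely through the visual response.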

| IDEMO-30 | Demonstration of Field Curvature Aberration Correction Using Curved CMOS Image Sensors<br>Masahide Goto, Shigeyuki Imura<br>Japan Broadcasting Corporation (NHK) (Japan) |

| We demonstrate thin, bendable CMOS image sensors (CISs). Using silicon-on-insulator layer transfer technology, we developed the world's first thin and bendable CIS, with a thickness of 11 µm. In this demonstration, the CIS is shaped into a concave, partially spherical form that matches the image plane formed by a lens, reducing peripheral blurring caused by field curvature aberration. Compared with a flat CIS, the developed curved color CIS in QVGA format (320 × 240 pixels) significantly reduces peripheral blurring with a single lens, confirming the feasibility of next-generation wide-angle, high-quality imaging and compact camera systems. | ||

| IDEMO-31 | Resin Electrochromic (EC) Dimming Glasses and Actual Samples of 3D Thermoforming Process for Prescription EC Lens<br>Takuya Ooyama, Masahiro Kitamura<br>Sumitomo Bakelite Co., Ltd. (Japan)<br>Related IDW ’25 papers: EP5-1, EP5-2 |

| We have developed an electrochromic (EC) sheet for prescription glasses, the world's first resin EC consumer device. EC glasses can freely dim according to the surrounding brightness and situation. The developed EC sheet offers high transmittance, a wide dynamic range, low power consumption, safety, and light weight, among other features. We will exhibit 1) working, durable resin EC glasses and 2) actual samples from the 3D thermoforming process for prescription EC lenses. You can wear the EC glasses and experience how fast they color and decolor compared with the light and dark adaptation of your eyes. | ||

| IDEMO-32 | (Invited) Pilot ID - User Recognition for Multi-User Touch Interfaces<br>Iyad Nasrallah¹, Kaori Sakai²<br>¹TouchNetix Ltd. (UK), ²TouchNetix Ltd. (Korea)<br>Related IDW ’25 paper: INP3-2 |

| Discover how Pilot ID technology is redefining multi-user touch environments. Our demo will show how Pilot ID can distinguish between different users of a touch screen or surface, enabling safety-enhancing, dynamic adjustments to HMI functions. For example, the system can implement user-specific features within an automotive environment or simplify menus by recognising which user is interacting with the HMI. Perfect for automotive, industrial, and consumer environments, Pilot ID offers a reliable, cost-effective solution that can be easily integrated alongside all other touch and force solutions. | ||

| IDEMO-33 | Developing of Ultra Low Refractive Index Material for Light Guide of AR glass<br>Keisuke Sato, Daisuke Hattori<br>Nitto Denko Corporation (Japan) |

| AR glasses are expected to become a next-generation device, and lightweight, compact optical-waveguide AR glasses in particular are expected to become mainstream. In such glasses, an air gap adjacent to the waveguide is essential for keeping light guided inside the waveguide, but relying on an air gap also introduces some issues. We have developed an ultra-low refractive index (ULRI) layer composed of a high-porosity material. Used in place of the air gap, it enables gap-less AR glasses. Through this demonstration, we will explain the importance of ULRI materials in AR glasses. | ||
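
Why the layer next to the waveguide matters follows from the condition for total internal reflection: light stays guided only when it strikes the waveguide surface at more than the critical angle θc = arcsin(n_clad / n_core), measured from the normal. The lower the index of the neighboring layer, the smaller θc and the wider the range of guided angles, which is why air works well and why a solid replacement needs an index close to 1. The sketch below compares cladding options; every refractive index in it is an assumed illustrative value, not Nitto Denko's material data.

```python
import math


def critical_angle_deg(n_core, n_clad):
    """Critical angle (from the surface normal) for total internal reflection."""
    if n_clad >= n_core:
        raise ValueError("TIR needs the cladding index to be below the core index")
    return math.degrees(math.asin(n_clad / n_core))


N_WAVEGUIDE = 1.8  # hypothetical high-index waveguide glass
claddings = [
    ("air gap", 1.0),
    ("ULRI layer (assumed n = 1.15)", 1.15),
    ("ordinary adhesive (assumed n = 1.5)", 1.5),
]
for name, n in claddings:
    theta = critical_angle_deg(N_WAVEGUIDE, n)
    print(f"{name}: rays beyond {theta:.0f} deg from the normal stay guided")
# air gap: 34 deg, ULRI layer: 40 deg, ordinary adhesive: 56 deg
```

Under these assumptions, bonding with an ordinary adhesive would lose every ray between about 34 and 56 degrees that an air gap keeps guided, while a ULRI layer loses only a narrow band.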

| IDEMO-34 | VR-Based Simulator for Aerial Images by Optical See-Through AIRR<br>Ryota Yamada, Hiroki Takatsuka, Shiro Suyama, Hirotsugu Yamamoto<br>Utsunomiya University (Japan)<br>Related IDW ’25 paper: 3D4-4 |

| We demonstrate a VR simulator that reproduces the aerial image generated by an optical see-through AIRR (aerial imaging by retro-reflection) system. The simulator automatically determines the location of the aerial image from the positions of the retro-reflector and the light source. During rendering, pixels outside the computed viewing area are excluded, reproducing the appearance of the aerial image in real time according to the observer’s head motion. Using the passthrough function of a VR headset, the virtual aerial image can be overlaid on the real environment for comparative analysis. | ||

| IDEMO-35 | Extension of Floating Distance and Aerial Images by Use of Convex-Mirror in the AIRR<br>Akito Fukuda, Sotaro Kaneko, Kazuaki Takiyama, Shiro Suyama, Hirotsugu Yamamoto<br>Utsunomiya University (Japan)<br>Related IDW ’25 paper: FMCp1-19 |

| We propose an aerial imaging by retro-reflection (AIRR) optical system that uses a convex mirror to extend the floating distance and enlarge aerial images. The floating distance and the size of the aerial images are expanded by changing the position of the optical virtual image that the AIRR optical system forms behind the convex mirror. This demo system uses the proposed AIRR system to form enlarged aerial images, and observers can compare the size of the presented source image with that of the resulting aerial image. | ||

| IDEMO-36 | Large-Scale Full-Color Stacked Computer-Generated Volume Hologram Created Using Tiling Contact-Copy<br>Tomoya Yamamoto, Hirohito Nishi, Kyoji Matsushima<br>Kansai University (Japan) |

| We will demonstrate a full-color, full-parallax deep 3D image reconstructed by stacking three computer-generated volume holograms (CGVHs), each 18 cm × 18 cm in size (Fig. 1(a)). The CGVHs are fabricated by tiling contact-copy, in which three computer-generated holograms (CGHs) printed by laser lithography are transferred to photopolymers in an 8 × 8 tiling process. Each master CGH is composed of more than 100 billion pixels and provides a viewing angle of over 33°. Under white LED illumination, high-quality full-color 3D images with a strong sensation of depth are clearly observed, without any visible tile boundaries (Fig. 1(b)). | ||

| IDEMO-37 | Full-Color Holographic AR Effect Display Using Stacked Computer-Generated Volume Holograms<br>Shion Okai, Hirohito Nishi, Kyoji Matsushima<br>Kansai University (Japan) |

| We will demonstrate a holographic AR effect (HARE) display, in which three transparent computer-generated volume holograms (CGVHs) placed in front of a physical object generate full-color 3D images around it (Fig. 1(a)). Each CGVH, over 10 cm × 10 cm in size, is created from a full-parallax computer-generated hologram (CGH) with approximately 34 billion pixels. Because the CGVHs reproduce not only parallax but also accurate focus cues, viewers see color 3D images blended seamlessly with physical objects (Fig. 1(b)). In addition, HARE images produced behind the physical object look natural thanks to proper occlusion processing. | ||