Five Senses and Cross-Modal Technology

As a future trend in VR and AR technologies, this event focuses on the five human senses and the cross-modality between vision, hearing, taste, smell and touch. Vision has been the main interest at IDW, but it also greatly affects the other senses through cross-modal effects. By presenting the latest research on applications of the human senses, this event will offer hints for considering the future of display technology.

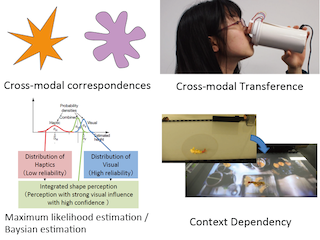

Cross-modal interfaces that utilize multisensory interactions are expected to be an efficient method for presenting sensory information. Since multisensory interactions can arise through several possible mechanisms, it is difficult to accurately grasp the effectiveness and limitations of cross-modal interfaces without a proper understanding of those mechanisms. Nevertheless, prior research has often conflated several mechanisms in its discussions, leading to confusion about the potential of cross-modal interfaces. In this talk, I will introduce the following four mechanisms by which multisensory interactions occur: cross-modal correspondences, cross-modal transference, maximum likelihood estimation / Bayesian estimation, and context dependency. I will also discuss the characteristics of the cross-modal interfaces that utilize each of these mechanisms.
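
As a rough illustration of the maximum likelihood estimation mechanism named above, the sketch below uses the standard textbook model in which each sense gives an independent, Gaussian-distributed estimate of the same quantity and the estimates are combined by inverse-variance weighting. The function name `mle_combine` and the numeric values are assumptions made for this example and are not taken from the talk.

```python
import math


def mle_combine(estimates, sigmas):
    """Combine independent Gaussian cue estimates by inverse-variance weighting.

    estimates: per-sense estimates of the same quantity (hypothetical values)
    sigmas:    per-sense standard deviations (noise levels)
    Returns (combined_estimate, combined_sigma, weights).
    """
    # Precision (reliability) of each cue is the inverse of its variance.
    precisions = [1.0 / (s * s) for s in sigmas]
    total_precision = sum(precisions)

    # Each cue is weighted by its share of the total precision.
    weights = [p / total_precision for p in precisions]
    combined = sum(w * x for w, x in zip(weights, estimates))

    # The combined estimate is never noisier than the most reliable single cue.
    combined_sigma = math.sqrt(1.0 / total_precision)
    return combined, combined_sigma, weights


# Hypothetical example: a reliable visual cue and a noisier haptic cue.
visual, haptic = 10.0, 12.0
estimate, sigma, weights = mle_combine([visual, haptic], [1.0, 2.0])
print(estimate, sigma, weights)
```

Under this model the more reliable sense dominates the combined percept, which is one reason vision can shift what is perceived through the other senses.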

In this presentation, we will introduce a method to provide users with past experiences and real-time experiences of remote locations by presenting information to the five senses. This multisensory display (the FiveStar) is equipped with displays for vestibular, proprioceptive (kinesthesia), tactile, and olfactory sensations in addition to audiovisual displays, enabling users to recreate, or relive, past travel experiences. A robot or a person moving in a remote place, holding a camera system (the TwinCam) for omnidirectional stereoscopic viewing, transmits images in real time. These images are presented simultaneously with vestibular and proprioceptive sensations, creating a realistic experience as if the user were walking in the area. Presenting the sensation of physical motion also has the very important effect of suppressing virtual reality sickness. We are building a system that allows these omnidirectional images to be shared in a metaverse gallery where users can freely select and experience them.

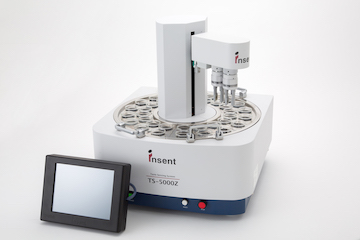

Sight and sound can be transmitted to distant places because we have devices to reproduce them (displays, speakers) as well as sensors to capture them (cameras, microphones). What about the senses of taste and smell? These two senses respond to chemical substances, which makes the situation considerably more complicated. Recently, however, the situation has improved greatly: research and development of taste sensors (e-tongues) and smell sensors (e-noses) is being actively pursued. The taste sensor developed in Japan was put to practical use 30 years ago, and more than 600 have since been used throughout the world. Smell sensors are also being developed, driven by advances in sensing materials and AI. We therefore stand at the start of a new age in which five-sense transmission becomes possible. These sensing devices are explained in the lecture.

Cross-modal perception refers to perceptual interaction between two or more different sensory modalities. We already know that odors have a variety of cross-modal effects, and we use them in our daily lives, for example in perfume and aromatherapy. Is there cross-modal interaction between olfaction and vision? Here, I will present one of my recent works on cross-modal perception between olfaction and vision. In psychophysical experiments, we found that the smell of lemon makes visual motion appear slower, whereas the smell of vanilla makes it appear faster. fMRI experiments also revealed that activity in visual areas changed with olfactory stimuli: the smell of lemon suppressed fMRI activity in the visual areas, and the smell of vanilla enhanced it. These findings suggest that new visual experiences can be created by using other sensory stimuli alongside advances in display technology.

Display Night, supported by Huawei Technologies Japan, will be held at Fukuoka Sunpalace on Dec. 15, and registration is free! Eminent speakers will give in-person talks, followed by time for direct conversation with ample drinks and dinner served.

OLED Materials Current Status and Future Prospective, Especially for Blue

Jang Hyuk Kwon (Kyung Hee University, Korea)

Blue OLED material technologies have been evolving step by step. Among the many blue material technologies, phosphorescent materials have recently been actively researched for their short exciton decay time, good color purity, and long lifetime. This talk discusses recently reported blue phosphorescent material technologies in comparison with TADF materials. It also discusses key methodologies for achieving long device lifetime in blue OLEDs.

Oxide TFTs with Higher Mobility

John Wager (Oregon State University, United States of America)

Next-generation oxide TFTs require higher mobility. Higher mobility amorphous oxide semiconductors have higher free electron concentrations. Employing them requires reducing channel layer thickness compared to a-IGZO TFTs. It is proposed that optimal TFT performance occurs when the channel layer is constrained to be thinner than 2.22 times the Debye length.
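
To give a feel for the thickness limit mentioned above, the sketch below evaluates the standard textbook Debye length, L_D = sqrt(eps_r * eps_0 * k_B * T / (q^2 * n)), and multiplies it by the factor 2.22 from the abstract. The abstract does not state which expression or parameter values the talk uses, so the formula choice, the relative permittivity of 10, the temperature of 300 K, and the carrier concentrations are all assumptions for illustration only.

```python
import math

# Physical constants in SI units.
EPS_0 = 8.8541878128e-12   # vacuum permittivity, F/m
K_B = 1.380649e-23         # Boltzmann constant, J/K
Q = 1.602176634e-19        # elementary charge, C


def debye_length_m(n_per_cm3, eps_r=10.0, temperature_k=300.0):
    """Textbook Debye length in metres for free electron concentration n.

    eps_r and temperature_k are illustrative assumptions, not values from the talk.
    """
    n_per_m3 = n_per_cm3 * 1e6  # convert cm^-3 to m^-3
    return math.sqrt(eps_r * EPS_0 * K_B * temperature_k / (Q * Q * n_per_m3))


def max_channel_thickness_nm(n_per_cm3, factor=2.22, **kwargs):
    """Upper bound on channel thickness: factor times the Debye length, in nm."""
    return factor * debye_length_m(n_per_cm3, **kwargs) * 1e9


# Hypothetical carrier concentrations: a higher n gives a thinner allowed channel.
for n in (1e16, 1e17, 1e18):
    print(f"n = {n:.0e} cm^-3 -> thickness limit {max_channel_thickness_nm(n):.1f} nm")
```

Because the Debye length scales as the inverse square root of the free electron concentration, a tenfold increase in concentration shrinks the limit by about a factor of 3.2, which is consistent with the abstract's point that higher-mobility materials need thinner channel layers.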

Participation is limited to 200, so don't miss it!